Can We Trust Our Algorithms?

By Felix Behr

Staff Writer

24/5/2019

“Felix Behhh.”

“I’m sorry. I couldn’t catch that. Please state your name.”

I glared at my phone, the current manifestation of everything I considered wrong with the world, and again bleated “Felix Behhh,” my accent taking its usual intolerant attitude towards the letter r — or, as it would have it, “the lettah ah.”

“I’m sorry. I couldn’t catch that. Please state your name.”

“Felix Behhh!”

This went on for some time until I managed a mangled “Fee-licks Berrrrr,” which, according to the automated helpline, was my name. I had to add the five r’s to it to overcome my tendency to chop the ends off. Admittedly, this happened a few years ago and since then voice recognition has expanded beyond the West Coast’s interminably long vowels and heavily emphasized r’s. But as we become increasingly reliant on automation, we need to be aware of its biases.

The cornerstone of automation is the algorithm. An algorithm is a highly specific set of steps that produce a predictable output for any input. If, for example, you wanted to organize a set of numbers from lowest to highest, a high-level description (a description of what the code should actually do), would go:

1) If there are no numbers, there is no highest number.

2) If there are numbers, take the first and assume it’s the highest.

3) If the next number is larger, assume that number’s the highest.

4) Once the highest has been found, reiterate the process until the entire set is in order.

The important thing to note is that while these orders would be carried out by a machine, any sufficiently anal person could carry out algorithms manually. In fact, many start-ups that claim to use machine learning depend on a human labor force to execute the commands into the machine until the program learns to do it itself. With machine learning, an algorithm is fed enough sample or training data that it can perform new tasks without being explicitly programmed for it. This gives the machine the illusion of artificial intelligence. At first, though, the human labor has to pretend to be AI for the sake of marketing. What matters is that it looks like it functions with the smoothness and objectivity of a mathematically programmed machine.

What matters is that it looks like it functions with the smoothness and objectivity of a mathematically programmed machine.

Objectivity, however, is a facade. Since someone has to design the algorithm, the algorithm takes on all of the biases of its designer. In the rather banal case of me yelling at an automated helpline, the bias is that everyone sounds like the people who taught the program to recognize certain word-sound connections. I got there in the end, but just imagine if a program trained upon the rhythms of a well-spoken American was faced with a Glaswegian.

These biases make themselves known in facial recognition as well. As with voice recognition, a certain amount of training is required for facial recognition. So in a context comprised of mostly white men, like Silicon Valley, a machine will learn to recognize white men. To give it credit, it gets really, really good at recognizing the minute differences in this subgroup. But when faced with a different group, say, Asian women, the algorithm starts to guess and its accuracy falls.

A traveler uses facial recognition to check in at a self-service kiosk (Picture Credit: Delta News Hub)

It’s because of this bias towards defining a human face as a white face that in 2015 a story about Google’s racist auto-tag program broke. Google released an app that would automatically tag uploaded photos. So a picture with a car would be tagged with “car.” A black man noticed, though, that the app had tagged a picture of him and his friend as gorillas. Google apologized and said that they would work to fix it. According to an article in WIRED three years later, this “fix” involved removing the word “gorilla” as well as other primates from the app’s vocabulary. This wasn’t temporary either, as WIRED learned from a Google spokesperson that “gorilla,” “chimp,” “chimpanzee,” and “monkey” are still banned.

The solution shows the problem. It was easier for Google to simply remove the offensive terms than it was for it to solve the actual problem – that their app couldn’t differentiate black people from gorillas. A less charitable interpretation would be that they were lazy and merely sought the simplest way out. Either way, though, it shows that biases humans can ignore are difficult to deal with when they are encoded into an algorithmic system. While you would easily see that a black person is a human and not a gorilla, a system programmed purely on the bias that white people are human cannot make the necessary interpretative leap.

Biases humans can ignore are difficult to deal with when they are encoded into an algorithmic system.

The examples so far have been quite tame. An automated speaker that can’t recognize your voice is annoying. Google’s app was deeply offensive, but not directly harmful. The problem is that the rigidity of an algorithm’s bias can scale. The same logic behind these quality of life issues can also play out in societal ones.

In 2016, ProPublica, an American nonprofit newsroom that produces investigative journalism, published a piece titled “Machine Bias.” The investigation revolved around the questionable nature of a computer program that calculates the risk of a convicted person committing another crime in the future. The point of a risk assessment score, the number that the program rates the likelihood of reoffending, is to inform the justice system during its decision-making. However, the team, led by Julia Angwin, Jeff Larson, Surya Maatu, and Lauren Fisher, found that the program was exceptionally inaccurate. Only 20% of its predictions came true.

The program’s inaccuracy was due to the way it favored white defendants over black. It consistently rated black defendants at a high risk of reoffending while regarding white defendants as low risk. As with Google’s gorilla, the calculations behind these scores were fair. Arithmetic is indifferent to humanity. It was the manner in which the data was collected and framed that skewed the machine to a racial bias. The algorithm reached its conclusion by taking the answers from 137 questions and looking over the person’s criminal record. Some of these asked whether the person had been arrested before, if they carried weapons, and if they had violated their parole. Fair enough. Other questions, though, tried to predict future criminality by looking to the person’s background. These included “If you lived with both parents and they later separated, how old were you?” “How many of your friends have been arrested?” and “In your neighborhood, have some of your friends or family been crime victims?”

The racial bias arises from the latter set of questions. In America, the criminal justice system is already more than five times more likely to imprison African Americans. African Americans already live in more deprived areas. The family structure of marginalized communities is more fragile than well-off ones. This led to cases where the black defendant was imprisoned and the white received a lighter sentence or was set free, only for the latter to commit further crimes and be exonerated again by the algorithm. The algorithm didn’t create a racist system, but encoded the logic of an indirectly racist system, giving it the veneer of objectivity.

The algorithm didn’t create a racist system, but encoded the logic of an indirectly racist system, giving it the veneer of objectivity.

These misuses and biases have so far been reflective. The algorithm in the ProPublica piece unwittingly replicated a preexisting racist structure. The programmer didn’t necessarily create the software to work against black defendants, but it reflects the logic of a system that does. One could also knowingly incorporate the efficiency of an algorithm into an oppressive system.

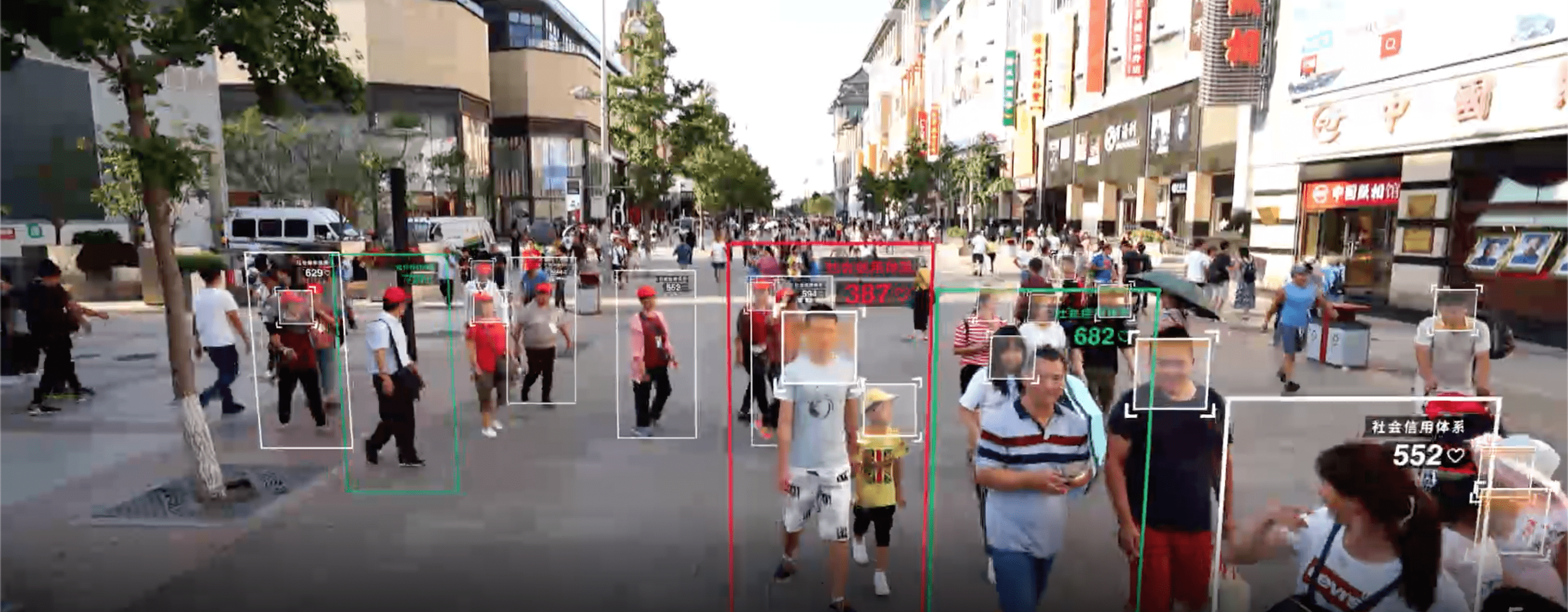

When Western media write about China’s Social Credit Systems, the reports tend to go full pathos. “China’s ‘social credit’ systems bans millions from travelling,” yells The Telegraph. WIRED whispers about “Inside China’s Vast New Experiment in Social Ranking.” MIT Tech Review asks “Who needs democracy when you have data?” While these systems are used for surveillance, their main, official purpose is to promote trust in commercial and civic life.

Of course, we have to look at who is defining what trust is – the Chinese Communist Party. Effectively, the system gives a technological basis for the Party’s desire for people to act in a certain way. However, we should look at what it does to accomplish this without immediately resorting to a caricature of a surveillance state that is drawn from our own mentality. The system’s oppressive, but it’s oppressive in its own way, not in the way of a science fiction novel written in post-war Britain.

Picture Credit: ABC News

In the West we hear rumors of a single Orwellian system, but the initiative comprises two types of credit systems, privatized commercial ones and the Social Credit system that the government is aiming to implement by 2020. Alibaba’s Sesame Credit, the largest commercial system, draws from mega-corporations’ massive supply of data to create trustworthiness scores for users who have opted into the system, based off of their payment history, online behavior, and social network. With a high enough score, people can earn benefits like not having to pay a rental deposit. A low score, especially when they are placed on a public blacklist, removes these privileges.

Punishment, as opposed to the revocation of a privilege, is not a focus of the commercial systems but of the public Social Credit system. This takes the form of blacklists comprised of people who commit acts that are not strictly speaking illegal but undesirable, like being able to pay off your debt but choosing not to. The punishments tend to bar people from doing things, taking a certain job, travelling comfortably, or sending one’s children to certain schools. Some people may gnash their teeth and cry against liberty, but this is not so different from Western stores putting people on a blacklist for return-fraud. The idea is to spread a sense of trust and social responsibility throughout society. And for the people who fit with the system it works. It’s the authoritarian version of the libertarian dreams of blockchain.

How about the people who don’t fit? In May, Human Rights Watch reported how it reverse-engineered an app used by the Integrated Joint Operations Program, the main mass surveillance system in Xinjiang, which collects data, reports suspicious activity, and prompts investigations.

Sometimes the suspicions are reasonable. If a person is using someone else’s ID, that should warrant a raised eyebrow and an inquiry. However, the list of things that the app considers suspicious extends beyond that to mismatches between a car driver and a car owner, using the back door instead of the front, and, as this sample case that was found in the app’s source code shows, exhibiting religious tendencies:

Suspicious person Zhang San, whose address is Xinjiang Urumqi, ID number 653222198502043265, phone number 18965983265. That person has repeatedly appeared in inappropriate locations, and he displays [or his clothing shows] strong religiousness.

The only people who are likely to display “strong religiousness” are the Uighurs and Kazakhs, who are Muslim and belong to the native Turkish ethnic group in Xinjiang. The Chinese government sees these groups as a threat to the state and tries to suppress expressions of their identity. This includes mass detentions and “correctional” facilities, creating a humanitarian crisis. While the program’s justification is that this is in response to terrorism and there have been scattered pockets of violence incited by separatists, the official definition of terrorism is vague enough to include any aspect of Uighur or Kazakh identity.

Atushi City Vocational Skills Education Training Service Center, a detention camp, in Xinjiang

While Western media portrays the expansion of technology into every aspect of our lives as something either out of 1984 or Brave New World, the dystopian nightmare of this algorithmic world resembles Kafka more than it does Orwell or Huxley. There are rules. But once the rules are locked inside a computer case, they become infinitely more difficult to access and alter. While a human can make the necessary interpretive leap to understand that when I scream “Behhh” I mean “Behr,” a computer will stare blankly and patiently, waiting for me to say “Behrrrrr.” After all, according to the old rules, everyone spoke with a standard American accent. These rules rule our lives.

Not every use of an algorithm will lead to a concentration camp, of course (feel free to check the weather). What should be clear, though, is that we have to think about the algorithms around us. They have entangled themselves through almost every aspect of society, running through them as ivy does along a wall. This is not wrong in itself. In each example I used, the algorithm itself was not at fault. Rather it amplified the faults already present, breaking open the cracks with all the efficiency of a machine.

The only solution to these problems is to fix society, to rectify and work against the biases that inform these algorithms. Without this societal change, no amount of transparency will suffice. Our logic has flaws. That is what we need to tackle if we want to avoid a hostile algorithmic world.